Med Tech

We were recently awarded a Critical Technologies grant to perform a feasibility study integrating 3D printing, 3D scanning, and commercially available...

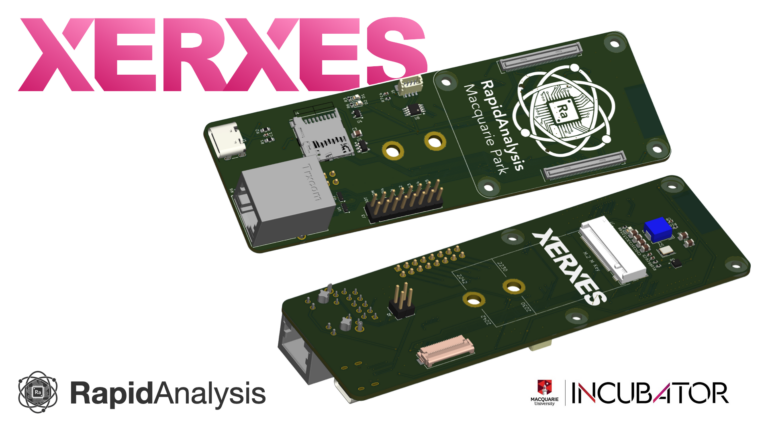

Embedded Device Development

We develop in our own Edge Development environment using RapidAnalysis hardware and cloud software to provide AI and API Services. RapidAnalysis...

Machine Learning

We use Jupyter Notebooks and JupyterLab for all our Machine Learning deliverables. Please continue on for a brief overview of how...

Re-purpose old CPUs for new computational tasks in the AI era

A quiet revolution is taking place in computing, where single-board computers (SBCs), which in the past were mainly used for...

SoftMatch: Measuring gaze

An innovative way to examine bottom-up sensory influencers. What is eye tracking? Eye tracking is a technology that involves monitoring and analyzing...